Human-centred AI for an inclusive society

In 2021, the Dutch Research Agenda (NWA) launched a programme on human-centred AI for an inclusive society. Research in this programme focuses on the development and application of trustworthy, human-centred AI. To conduct this research, the NWA supports various ELSA (Ethical, Legal, Societal Aspects) Labs.

Each ELSA Lab consists of a consortium of public and private organisations, companies, civil society parties, and supervisory bodies. Together with both citizens and the government, the ELSA Labs work on human-centred AI solutions based on concrete cases. These solutions should be both generalisable and scalable.

In 2022, five ELSA Labs were awarded funding by the NWA. Among these was the ELSA Lab Defence, of which I am a member. The ELSA Lab Defence focuses on the design, development, and application of trustworthy military AI. Our use cases encompass early warning systems for countering cognitive warfare, (non-lethal) autonomous robots, and decision-support systems.

These AI-based systems could greatly support the Dutch military, but ethical, legal, and societal challenges require that they are developed and deployed responsibly, in a way that safeguards human values. For this reason, the ELSA Lab Defence works on human-centred AI solutions in the military domain.

First national ELSA Labs Congress

As a member of the ELSA Lab Defence, I attended the first national ELSA Labs Congress on 1 December 2022. At this congress, all 22 ELSA Labs came together to exchange ideas and strengthen the ELSA Labs Network. What I remember most from that day is the presentation by Lisa Brüggen on the official opening of the ELSA Lab Poverty and Debt. Up until that point, I had not read anything about the other ELSA Labs, so I only knew what we, at the ELSA Lab Defence, intended to accomplish.

As someone new to TNO, I found the concept of the ELSA Lab Defence innovative and unique. I believed this… up until I saw the presentation on the ELSA Lab Poverty and Debt. It was at that point that I discovered two things.

Firstly, all ELSA Labs share the same motivating drive. Secondly, we all want to do more or less the same thing, albeit in different contexts. This grand idea of developing an “ELSA Methodology”, which I thought was unique to the ELSA Lab Defence? Well, it wasn’t so unique.

That being said, I also noticed that, despite having similar goals, no one at this first national congress really seemed to know how to accomplish them. I didn’t know what an “ELSA Methodology” would look like, but then, neither did anyone else. In this blog post, I take a first stab at what an ELSA Methodology might entail, inspired by concepts from the field of Responsible Research and Innovation.

The ELSA methodology lifecycle

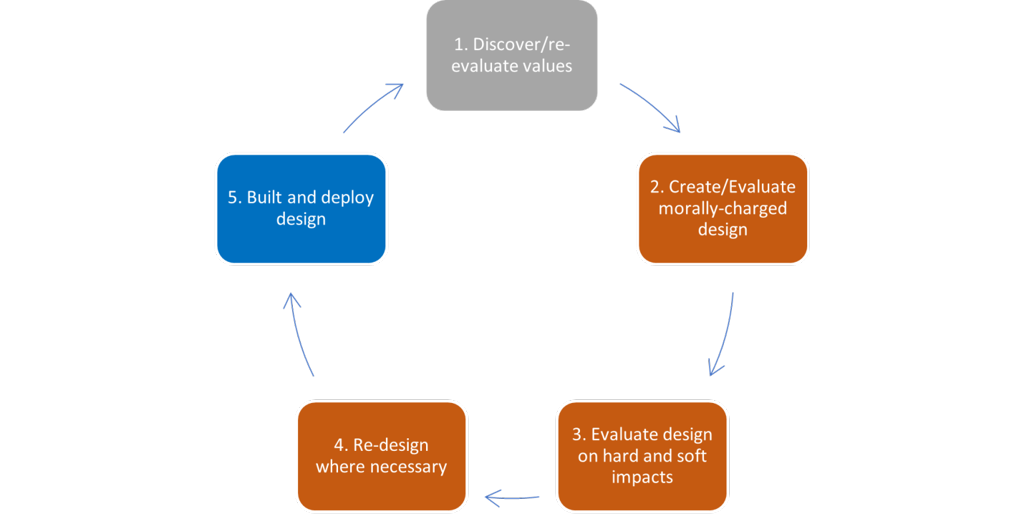

Figure 1 shows a first draft of a design methodology that aims to be responsible and incorporate ethical, legal, and societal aspects. The design methodology consists of three identified phases: research (grey), development (orange), and deployment (blue). In this way, the methodology intends to cover all aspects of the innovation process associated with AI-based applications.

Figure 1: First draft of an ELSA Methodology

Identifying values

In the first phase, research, we begin by identifying the values that are important for the AI application. What these values look like depends on the context in which the application is being developed. In the case of the ELSA Lab Defence, important values might be safety, security, and freedom.

For identifying the values, it is important to be inclusive and to apply different methods. Example methods for identifying values are: using existing frameworks (e.g. the European AI Act), conducting interviews, organising workshops, and engaging in observational studies.

Whichever method is chosen, an important question is whom to invite to the table. It is important to be inclusive, so that the values represented are those of the many and not the few, but inclusivity does not mean inviting everyone. It is good to think critically here about which public will be affected by the AI application and which actors are involved in its design, development, and deployment.

Designing ELSA-sensitive innovation

In the next phase, the values gathered in the first phase are incorporated into an initial design. Practices from value sensitive design can be useful in this phase. Specifically, the values from phase one can be translated into norms, which are then translated into design requirements, following the values hierarchy.

In designing a values hierarchy, there is no correct answer. There is no single correct specification that leads from a value to a design requirement. Instead, it is important to document the choices made and their justification. In this way, others can easily review how the identified values were taken into account in the final design.
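To make the idea of a documented values hierarchy a little more concrete, here is a minimal sketch in Python. It is only one possible way of recording the chain from value to norm to design requirement, together with the justification for each step. The class names, the helper functions `trace` and `undocumented`, and the example norm and requirements are all hypothetical illustrations, not something the ELSA Lab Defence actually uses.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DesignRequirement:
    description: str
    justification: str = ""  # why this requirement follows from its norm


@dataclass
class Norm:
    description: str
    justification: str = ""  # why this norm follows from its value
    requirements: List[DesignRequirement] = field(default_factory=list)


@dataclass
class Value:
    name: str
    norms: List[Norm] = field(default_factory=list)


def trace(value: Value) -> List[str]:
    """List every design requirement with its path back to the value."""
    return [
        f"{value.name} -> {norm.description} -> {req.description}"
        for norm in value.norms
        for req in norm.requirements
    ]


def undocumented(value: Value) -> List[str]:
    """Return the norms and requirements that still lack a justification."""
    missing = []
    for norm in value.norms:
        if not norm.justification.strip():
            missing.append(norm.description)
        for req in norm.requirements:
            if not req.justification.strip():
                missing.append(req.description)
    return missing


# Hypothetical example content, invented purely for illustration.
safety = Value(
    name="safety",
    norms=[
        Norm(
            description="a human can always halt the system",
            justification="keeps a person in control when behaviour is unexpected",
            requirements=[
                DesignRequirement(
                    description="remote stop command",
                    justification="operators may not be physically near the robot",
                ),
                # Deliberately left without a justification.
                DesignRequirement(description="physical stop button"),
            ],
        )
    ],
)

for line in trace(safety):
    print(line)
print("Still to justify:", undocumented(safety))
```

The point of the sketch is not the particular structure but the habit it encodes: every translation step carries its own justification, and gaps in that documentation are easy to spot before a design is reviewed.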

After creating a first design, the design should be evaluated on both its hard impacts (quantitative, directly caused by the application) and its soft impacts (qualitative, less directly linked to it). The most difficult part of this evaluation is, of course, that it is impossible to know in advance all the ways in which an application might impact society. In this phase, it is less important to foresee everything accurately and more important that you have thought about the possible impacts and have factored them into your design. Hence the fourth step: re-design.

From design to deployment

Finally, the design is ready to be built and deployed. In this phase, it is important that there is a good line of communication between the various partners who, by this point, may have worked on the AI application.

Builders of the application know how best to turn designs into a realistic application, and designers know best which aspects of the design are and are not vital. Marketing can, in turn, learn from the previous phases which important values the design takes into account and sell the application accordingly.

Importantly, the design methodology is cyclical rather than linear. Since values are dynamic, they will have to be re-evaluated regularly to see whether they have changed and, if so, whether the application still responds to them adequately. If necessary, a new version of the application can be released to better incorporate the shifting values.

Building a responsible future together

Figure 1 provides a first draft of a possible ELSA methodology, and the rest of this blog post expands upon its various steps. It would be wrong of me to suggest that the methodology conceptualised above is anywhere near exhaustive or complete. As per Heidegger’s view on methods, any methodology can only be developed dynamically and in tandem with others.

That being said, if for no one else but myself, it helps to think of the ELSA Methodology in terms of actions that we need to undertake to move in the direction of responsible artificial intelligence. It helps to know it can be more than a buzzword that provides empty promises. It can be something tangible. Something executable. Something the ELSA Lab Defence (and all its sister labs) can and will accomplish.

About the author

After studying Artificial Intelligence at the University of Groningen, Ivana sought out work where she could meaningfully contribute to the direction of AI technologies. She’s passionate about creating human-centred AI that aims to have a positive societal impact. At TNO, she has been given the opportunity to help shape the future as a scientist innovator. She’s involved with several projects related to the responsible innovation of Artificial Intelligence, such as the ELSA Lab Defence. LinkedIn: https://www.linkedin.com/in/ivana-akrum